Been to any AI meetups or conferences recently? Multimodal AI is no longer a futuristic concept; it’s powering today’s most transformative applications. Beneath every smart system that sees, reads, and reasons lies an increasingly complex stack of data orchestration, inference, indexing, search, and retrieval. That’s where ApertureDB AI Workflows come in: modular, flexible, and purpose-built for modern AI pipelines.

What Are Aperture AI Workflows?

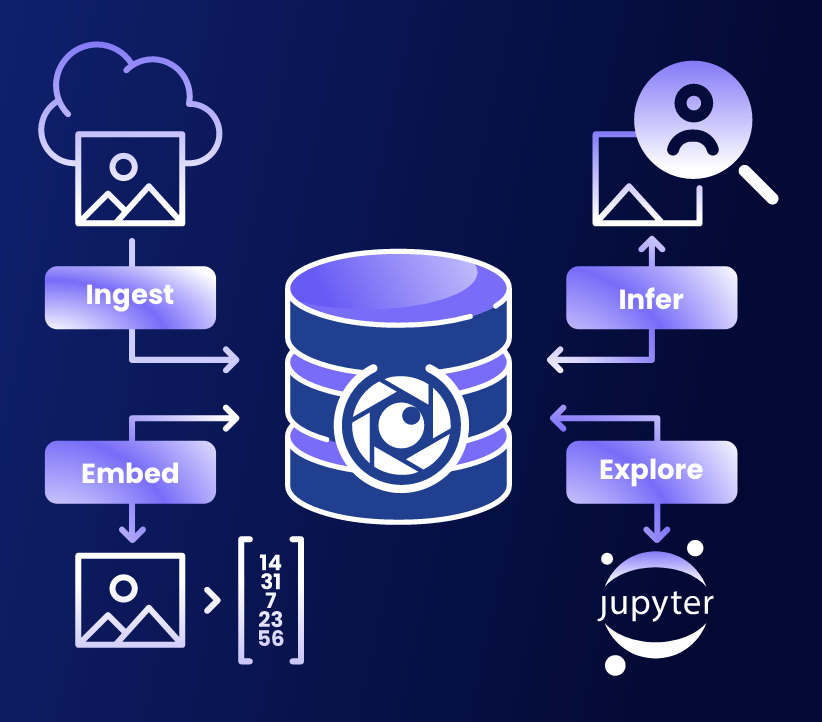

ApertureDB is the foundational data layer built for managing data from a variety of sources and modalities, ranging from tables and graphs to embeddings, text, and videos. While it's easy to integrate ApertureDB with other software packages to build applications, AI Workflows take it a step further and let you create applications by assembling prebuilt blocks.

Aperture AI Workflows automate commonly performed tasks in managing multimodal datasets for AI. They are structured sequences of operations designed to solve common AI/ML tasks such as multimodal data ingestion, search, data correlation, or metadata filtering, using the graph, vector, and multimodal-native capabilities of ApertureDB, sometimes paired with models or services from partner tools. These workflows are designed to be easy to use and can be customized to fit your needs.

Depending on its goal, an AI workflow can include:

- Data ingestion and linking across modalities (text, image, video, embedding, etc.)

- Preprocessing and embedding using user-defined or built-in models or functions

- Storage and indexing for retrieval and filtering

- Inference steps for enriching data, powered by connected models or APIs

- Query interfaces optimized for speed, semantic depth, or interoperability
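To make the stages above more concrete, here is a minimal, purely illustrative sketch in Python, the language the workflows themselves are written in. It does not use the ApertureDB API: the function names `ingest`, `enrich`, and `search` are hypothetical, a bag-of-words counter stands in for a real embedding model, a plain Python list stands in for ApertureDB's storage and vector index, and inference enrichment is left out for brevity.

```python
import math
from collections import Counter

# Hypothetical toy "embedding": a bag-of-words count vector.
# A real workflow would call a user-defined or built-in model instead.
def embed(text: str) -> Counter:
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def ingest(raw: dict[str, str]) -> list[dict]:
    # Ingestion: wrap each raw item as a record with an id and modality metadata
    return [{"id": key, "text": text, "modality": "text"} for key, text in raw.items()]

def enrich(records: list[dict]) -> list[dict]:
    # Preprocessing/embedding: attach an embedding to every record
    for record in records:
        record["embedding"] = embed(record["text"])
    return records

def search(index: list[dict], query: str, k: int = 1) -> list[str]:
    # Query: rank stored records by similarity to the query's embedding
    q = embed(query)
    ranked = sorted(index, key=lambda r: cosine(q, r["embedding"]), reverse=True)
    return [r["id"] for r in ranked[:k]]

# The list returned by enrich() stands in for storage and indexing.
index = enrich(ingest({
    "a": "cats chase mice",
    "b": "stock market report",
    "c": "dogs chase cats",
}))
print(search(index, "market report"))  # ['b']
```

Each stage takes the previous stage's output as input, which is what lets stages be swapped (a different embedding model, a different store) without rewriting the rest of the pipeline.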

Why Workflows?

If there’s one thing we’ve learned after countless conversations and onboarding experiences with developers, researchers, and product teams — it’s that users fall along a spectrum. Some want the granular control to build AI systems piece by piece. Others want plug-and-play solutions that deliver results out of the box.

Rather than force a one-size-fits-all approach, we launched AI Workflows to strike a balance. They’re composable enough for deep customization (MLCommons Croissant ingestion, anyone?), yet curated enough to accelerate time-to-value (have you tried our website-to-RAG workflow yet?).

In multimodal AI, coordination across a variety of data sources and modalities is paramount. Raw assets mean little unless they’re made interoperable and easily accessible. Workflows solve key pain points:

- Reduce engineering overhead in assembling complex ETL pipelines arising from such a mix of data types

- Ensure reproducibility across experiments or deployments as AI continues to evolve

- Unify graph and vector operations so systems can reason semantically and contextually

- Make complex logic explainable and adaptable

They're the glue between data, computation, and user intent. Workflows let us deliver foundational logic while leaving room for innovation that can then be built on top.

Why Workflows First Instead of Agents or Applications?

We deliberately chose workflows over standalone agents or prebuilt applications. Here’s why:

- Agents can be rigid and hard to repurpose for new modalities or data sources.

- Applications often mask the underlying logic, making them hard to extend.

- Workflows, by contrast, expose the building blocks — the data connections, inference steps, and modality-aware operations — while still delivering turnkey functionality.

In short, workflows let users assemble, modify, and deploy multimodal AI systems in whatever way suits them best. Now that these building blocks are available, stay tuned for what we build next (hint: agents and applications!).

How Do ApertureDB Workflows Work?

There are three ways to deploy Aperture AI workflows:

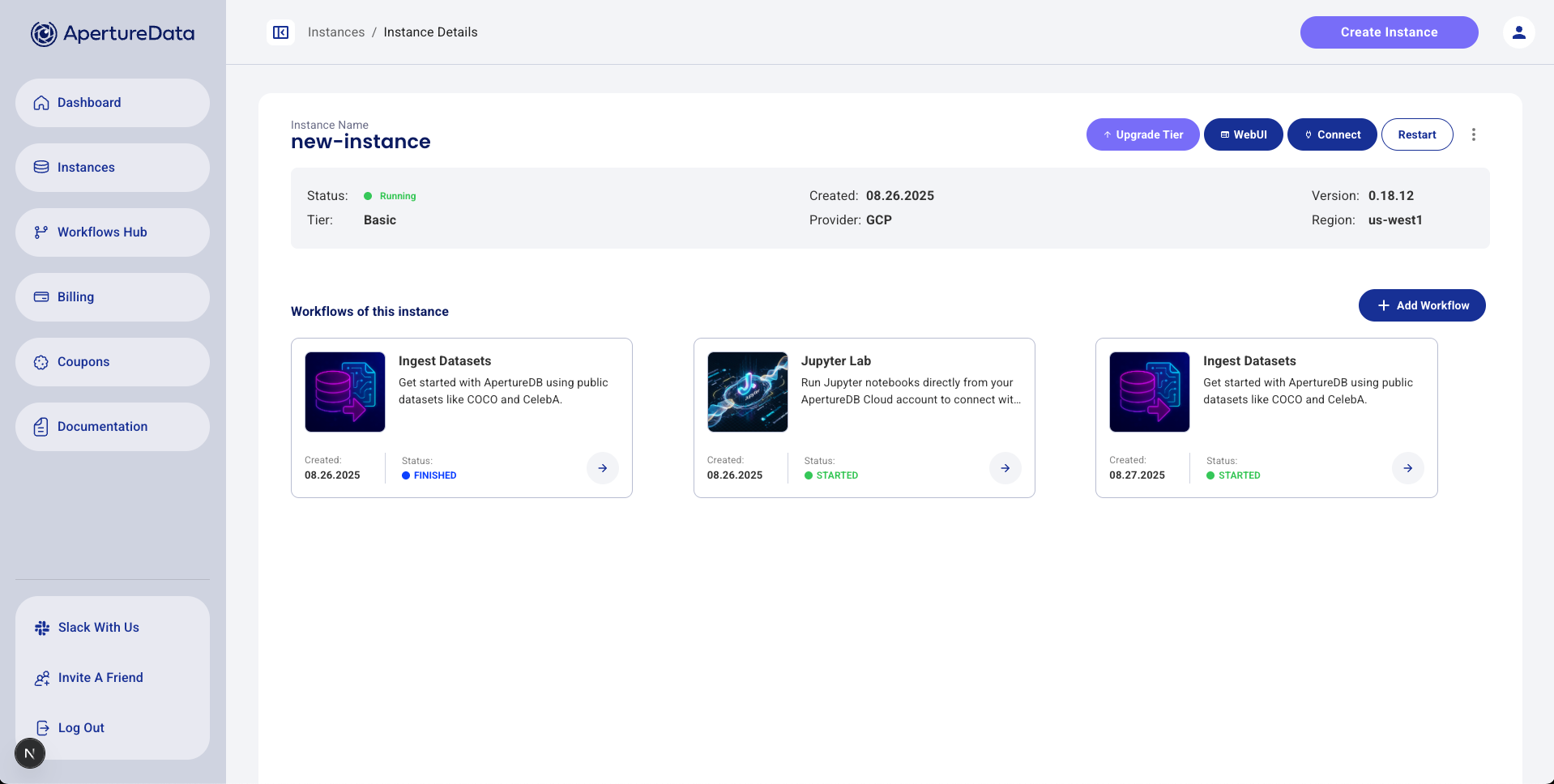

- Workflows can be invoked from ApertureDB Cloud with a few clicks. This is the easiest way to use them, but there are two restrictions: not all options are available, and they can only be used with cloud instances.

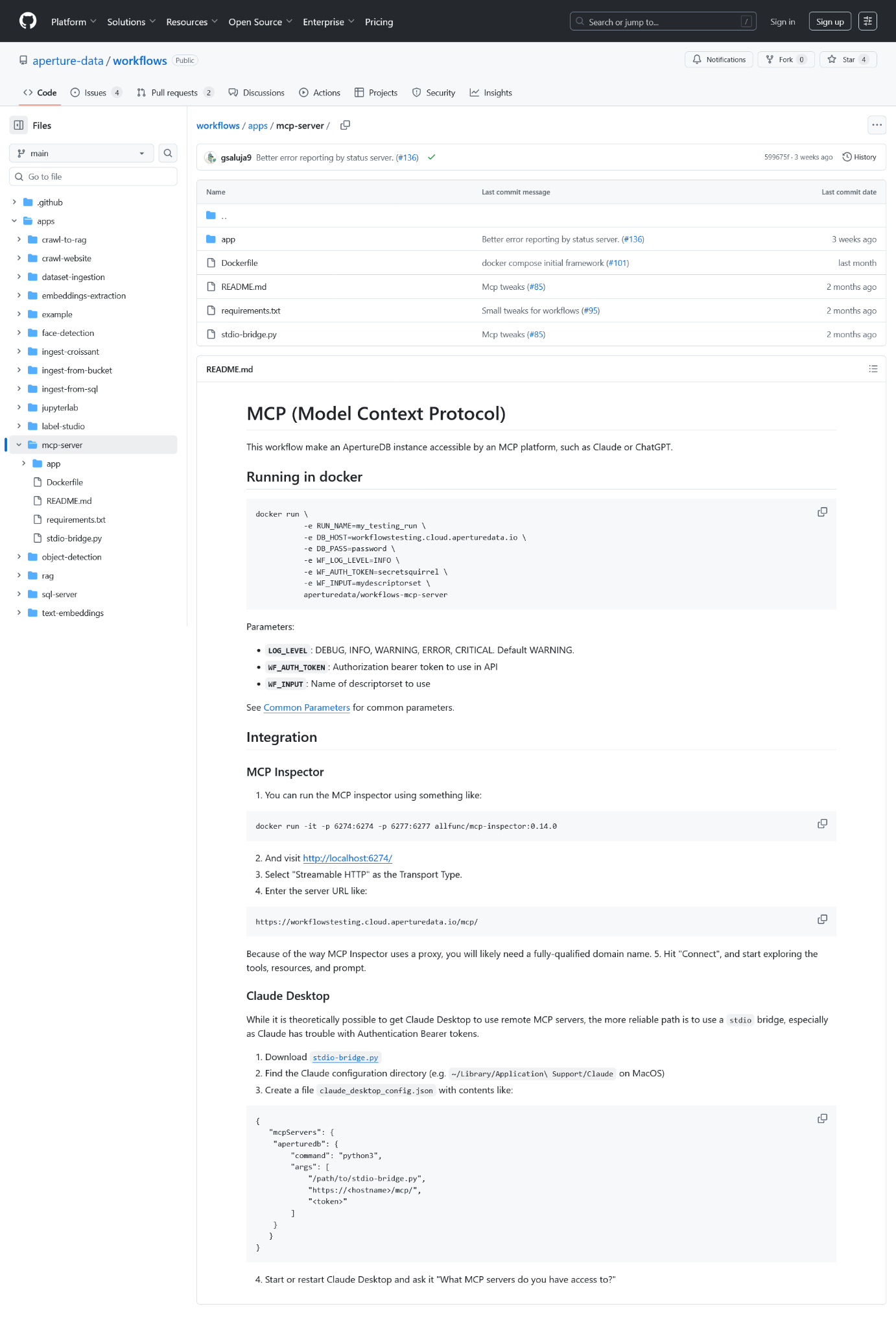

- All workflows are also provided as Docker images, so they can be run from the command line or via Kubernetes. This is slightly harder, but some additional options are available, and the images can be used with any ApertureDB instance (for example, the community edition if you are evaluating, or an instance in your own VPC).

- Workflows are implemented in Python and are open source, so they can be modified to fit your needs or reused in your own code. This is the most flexible way to use workflows, but it requires writing code yourself or working with the ApertureData team.

Depending on their purpose, workflows can be one-off (e.g., ingestion), periodic or event-based (e.g., generating embeddings every time new data appears in the database), or continuous (e.g., a Jupyter, SQL, or MCP server for querying data in the database).
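The difference between a one-off run and a periodic or event-based one is mostly about when a step is triggered and which data it touches. As a hedged illustration, not ApertureDB's actual implementation, an event-based embedding step can be written to process only records that lack an embedding, so it is safe to re-run on a timer or whenever new data arrives. The `embed_new` function, the in-memory `store`, and the `toy_embed` model are hypothetical stand-ins for database records and a real embedding model.

```python
from typing import Callable

def embed_new(store: list[dict], embed_fn: Callable[[str], list[float]]) -> int:
    """Embed only records without an embedding; return how many were processed."""
    processed = 0
    for record in store:
        if "embedding" not in record:
            record["embedding"] = embed_fn(record["text"])
            processed += 1
    return processed

# Hypothetical toy model: a one-dimensional "embedding" of the text length.
def toy_embed(text: str) -> list[float]:
    return [float(len(text))]

store = [{"id": 1, "text": "first document"}, {"id": 2, "text": "second one"}]
print(embed_new(store, toy_embed))  # 2: both records are new
print(embed_new(store, toy_embed))  # 0: nothing new, so nothing is re-embedded

# Simulate new data arriving; the next trigger processes only that record.
store.append({"id": 3, "text": "fresh upload"})
print(embed_new(store, toy_embed))  # 1
```

Because the step is idempotent, the same function could back a one-off backfill, a periodic job, or an event hook; only the trigger changes.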

Workflows also let us integrate with dataset and model providers such as HuggingFace, OpenAI, Cohere, Twelve Labs, Together.ai, and Groq, as well as other tools such as AIMon for RAG response monitoring.

Available Workflows

We have been on a roll this year, launching over a dozen workflows since the first one debuted in March 2025 (our Product Hunt announcement made it into the top 10), and the list will keep growing. You can browse these workflows in the Workflows Hub section of ApertureDB Cloud and install those that match your needs. Whether you are a cloud user or run our community or enterprise edition in your VPC, you can take advantage of workflows from our GitHub repository.

We group our current set of workflows into the following categories:

- Data ingestion: ingestion from a Croissant URL, from SQL tables, or from cloud buckets, and public dataset ingestion for a quick start, all simplify multimodal data ingestion, which is often the first step in building any AI application.

- Enrichment: object detection, face detection, and embedding generation all add more information to the data already present in the database.

- Query: a Jupyter workflow for development, a SQL server for querying and for plugging in BI dashboards, a Label Studio integration for labeling, and an MCP server all give users ways to query their data.

- Composite: some workflows build on others or combine several steps. A chatbot workflow chains crawling, segmentation, embedding, and RAG; semantic search over PDF documents requires ingesting PDFs (typically from cloud buckets), followed by segmentation, embedding generation, and querying in the ApertureDB UI.

🎬 See our workflows in action—watch the demos here.

Can You Add New Workflows?

Absolutely. ApertureDB workflows are designed to be extensible. You can:

- Clone and modify existing workflows

- Define new types and relationships

- Integrate custom models at any step

- Create composite workflows from primitive workflows

We welcome community workflow contributions on our GitHub repository, whether for fast prototyping or for sharing. Our workflow codebase already contains a comprehensive set of reusable pieces, which makes it possible to create a new workflow in under a day.

What Can You Build with Workflows?

Workflows on ApertureDB allow you to go from simple search on multimodal data to complex and composite use cases like:

- Website Chatbot: Implement a continuous workflow that crawls web content, segments and embeds text, and deploys a RAG-based agent for real-time retrieval. Ideal for knowledge-centric bots that leverage ApertureDB’s unified vector and metadata store for contextual responses.

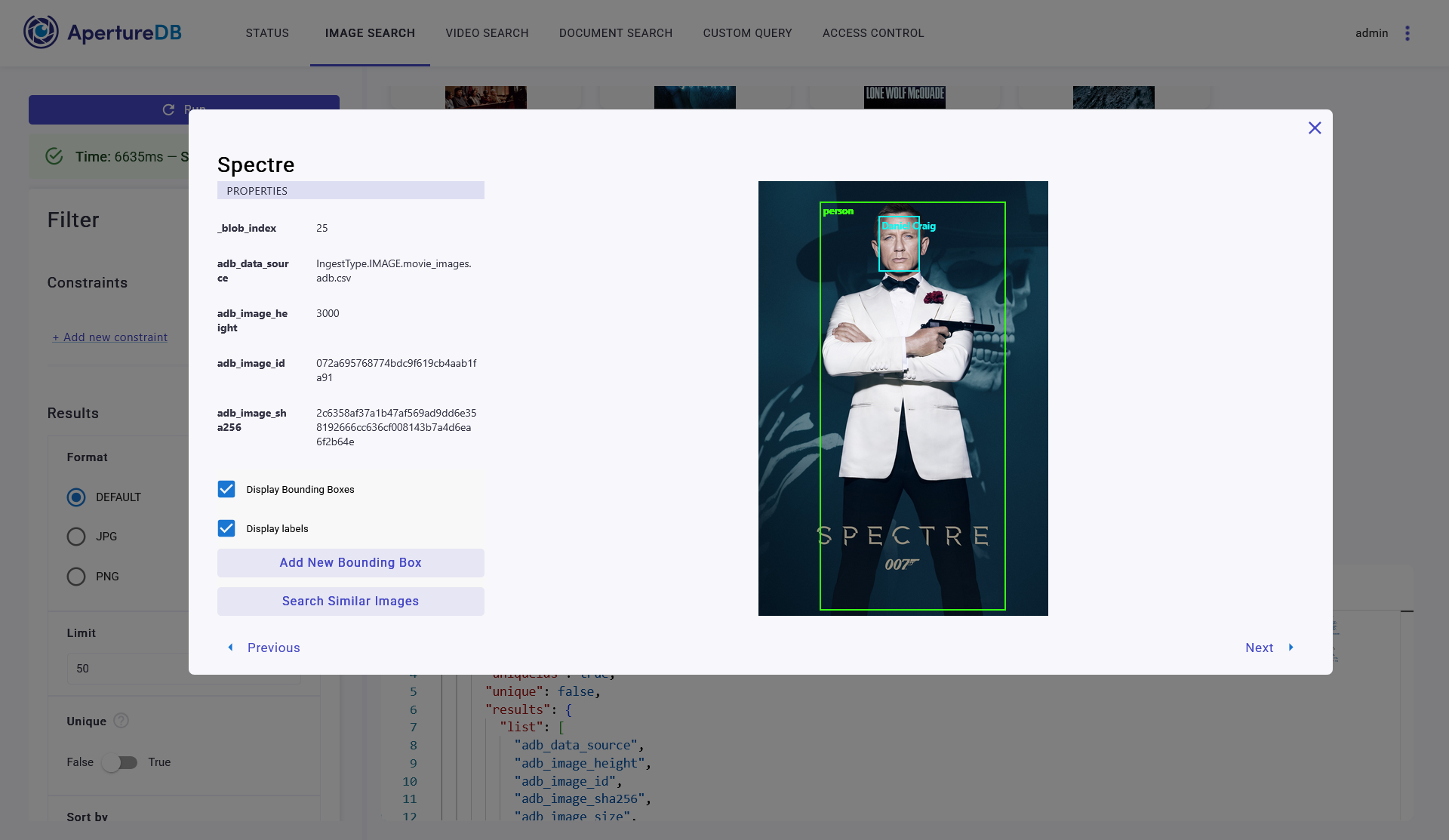

- Semantic Search: Use periodic workflows to ingest and embed multimodal data such as PDFs, images, and videos into ApertureDB and enable vector-based retrieval across modalities, supporting natural language queries and similarity search with graph-aware context propagation.

- Insights: Integrate structured and unstructured data pipelines into ApertureDB, enabling dashboards to query across embeddings, metadata, and temporal events through our SQL workflows. This supports real-time analytics and multimodal drill-downs for enterprise intelligence.

- Personalized Recommendations: Continuous workflows ingest and embed user interactions, product metadata, and visual content into ApertureDB. This enables real-time retrieval of similar items or behaviors across modalities—supporting recommendation engines that adapt to evolving user context.

- Customer Support Chat: Combine document ingestion, embedding generation, and RAG workflows to power chat interfaces that retrieve relevant multimodal knowledge (text, screenshots, videos). Agents can query ApertureDB for contextual answers, grounded in structured and unstructured support data.

- ML Training / Inference: ApertureDB workflows support dataset curation, annotation, and retrieval for training multimodal models. Inference pipelines can query embeddings, metadata, and ROIs directly, accelerating model deployment and evaluation with persistent, queryable memory. You can use the object detection and face detection workflows to annotate bounding boxes and spatial metadata. This enables granular querying within visual assets, such as sub-image retrieval or ROI-based filtering in medical, satellite, or retail datasets.

- AI Agents: Continuous workflows like the MCP Server connect generative models to ApertureDB’s memory layer. Agents can persist context, retrieve multimodal evidence, and act on evolving datasets, enabling autonomous reasoning, long-horizon planning, and personalized interactions.

Whether your task is already captured in a workflow or you need to figure out a new template, we have built enough examples to guide you through even the most complex multimodal AI applications. Just ping us on Slack!

🔮 What’s Next?

AI isn’t slowing down, and neither are we. Some items on our near-term horizon:

- Real-time feedback loops for adaptive systems (e.g., letting users iterate on segmentation logic for better RAG performance)

- GraphRAG workflow

- Interactive workflow visualizations

- Deeper integration with agent frameworks and LLM stacks

- …user requests for partner integrations

Whether you're pioneering autonomous agents or scaling enterprise search, ApertureDB AI Workflows are your bridge to building multimodal intelligence that actually works in the wild!

Improved with feedback from Drew Ogle, Sonam Gupta, Federico Navarro, Deniece Moxy

Images by Volodymyr Shostakovych, Senior Graphic Designer
